We have talked about XR and the reality-virtuality continuum, so now that we know what VR is, it is time to ask: “what can we do in VR?” From XR to VR. Light field projections could be a solution.

JAVIER MARTIN

Computer science

We have talked about XR and the reality-virtuality continuum, so now that we know what VR is, it is time to ask: “what can we do in VR?”


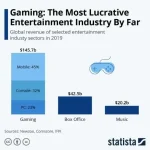

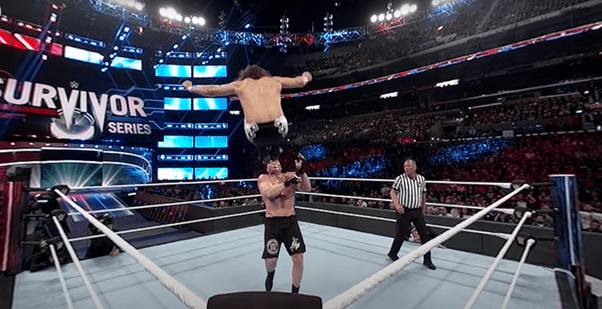

The first use case that comes to mind is probably video games. However, we should be careful not to underestimate it: VR is a new paradigm in an industry (video games) that is already the main engine of the entertainment industry, having grown in the last year to be bigger than movies and North American sports combined.

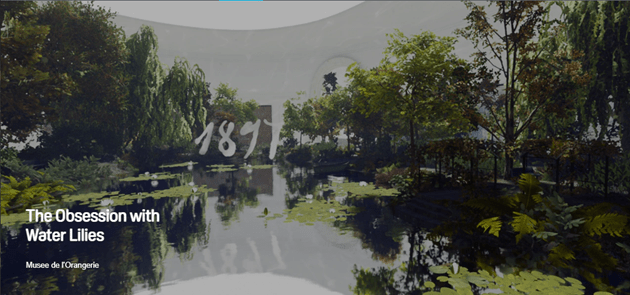

Less well-known are the arts-related applications, from virtual tours of the most famous museums in the world to fully immersive VR experiences where the artist plays with your perception of the environment in ways that are only possible in VR. Vive even goes so far as to have its own arts-focused program, “VIVE Arts.”

The other side of the coin is also possible: creating art inside the VR environment. Although there are many tools and approaches, one of the most interesting is Tilt Brush, a project started by Google (now continued as an open-source project, Open Brush). Tilt Brush created an original form of 3D art, based not on sculpting, meshes, or grids but on brushstrokes whose ink magically hangs in the air.

And we cannot end this overview without talking about 3D, and not just any 3D. We already know that with a stereo view (one image per eye) we can offer stereo 3D. But in VR we can also let the point of view move. This movement means that we see a stereo view for each of the infinite points where we can place our head: an integral 3D. Here, too, Google is leading the way with its “light fields.”
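To make the idea of “a stereo view for each head position” concrete, here is a minimal sketch that derives the two eye viewpoints from a tracked head position and yaw angle. The function name `eye_positions`, the coordinate convention (y up, looking down -z at yaw 0) and the 63 mm default interpupillary distance are illustrative assumptions, not part of any headset SDK; real VR runtimes typically report full head orientation and per-eye poses directly.

```python
import math


def eye_positions(head_pos, yaw_rad, ipd_m=0.063):
    """Return (left_eye, right_eye) positions for a head at head_pos.

    Hypothetical helper with assumed conventions: y is up, yaw rotates about
    the y axis, and at yaw = 0 the head looks down -z with its right ear
    pointing towards +x. ipd_m is the interpupillary distance in metres.
    """
    hx, hy, hz = head_pos
    # Unit vector pointing out of the right ear after rotating by yaw about y.
    rx, rz = math.cos(yaw_rad), -math.sin(yaw_rad)
    half = ipd_m / 2.0
    # Each eye sits half an IPD to either side of the head centre.
    left = (hx - rx * half, hy, hz - rz * half)
    right = (hx + rx * half, hy, hz + rz * half)
    return left, right


# Every head position yields its own stereo pair: moving the head 10 cm to
# the right moves both eye viewpoints with it (motion parallax on top of stereo).
for x in (0.0, 0.1):
    print(eye_positions((x, 1.6, 0.0), 0.0))
```

A renderer would draw the scene once from each returned position per frame, which is exactly the “stereo view for every head position” that plain stereo 3D cannot offer.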

This experience is hard to explain without trying it, even though it is probably the closest thing to reality you can get. When you capture light volumetrically, you can show the scene exactly as it was, without needing 3D models or physically based light simulation, and all of that at a computing cost close to zero.
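Why can showing a captured light field be so cheap? Here is a minimal sketch, assuming the classic two-plane (“light slab”) parameterization from Levoy and Hanrahan's 1996 light field rendering work rather than Google's own capture format, which differs. The captured light field is treated as a 4D array `lf[u, v, s, t]` of ray colours, where (u, v) indexes a camera position on one plane and (s, t) a point on a second plane. Rendering a new viewpoint then only means intersecting each viewing ray with the two planes and looking up the stored colour. The function `render_two_plane`, its plane layout and its parameters are hypothetical, and it uses nearest-neighbour lookup where a real renderer would interpolate between neighbouring samples.

```python
import numpy as np


def render_two_plane(lf, eye, uv_extent=1.0, st_extent=1.0, uv_z=0.0, st_z=1.0):
    """Render a novel view from a 4D light field lf[u, v, s, t] by pure lookup.

    Assumed (hypothetical) layout: the camera plane (u, v) lies at z = uv_z and
    the focal plane (s, t) at z = st_z; both are squares centred on the z axis
    with sides uv_extent and st_extent. u and s run along x, v and t along y.
    Extra trailing axes (e.g. RGB) are carried through unchanged.
    """
    n_u, n_v, n_s, n_t = lf.shape[:4]
    ex, ey, ez = eye
    if np.isclose(ez, st_z):
        raise ValueError("eye must not lie on the (s, t) plane")

    # One output pixel per (s, t) sample: each ray goes from the eye through
    # the centre of that sample on the focal plane.
    xs = (np.arange(n_s) / (n_s - 1) - 0.5) * st_extent
    ys = (np.arange(n_t) / (n_t - 1) - 0.5) * st_extent
    X, Y = np.meshgrid(xs, ys, indexing="ij")

    # Intersect each ray eye -> (X, Y, st_z) with the camera plane z = uv_z.
    k = (uv_z - ez) / (st_z - ez)
    xu = ex + k * (X - ex)
    yv = ey + k * (Y - ey)

    def to_index(coord, extent, n):
        # Continuous plane coordinate -> nearest sample index, clamped to the grid.
        idx = np.rint((coord / extent + 0.5) * (n - 1)).astype(int)
        return np.clip(idx, 0, n - 1)

    u = to_index(xu, uv_extent, n_u)
    v = to_index(yv, uv_extent, n_v)
    s = np.arange(n_s)[:, None]  # broadcasts against the (n_s, n_t) grid
    t = np.arange(n_t)[None, :]
    # No geometry and no shading: every output pixel is a single array lookup.
    return lf[u, v, s, t]


# Placing the eye exactly where capture camera (u=1, v=3) stood returns that
# camera's image; any other eye position just selects different stored rays.
rng = np.random.default_rng(0)
lf = rng.random((5, 5, 8, 8))
view = render_two_plane(lf, eye=(-0.25, 0.25, 0.0))
```

The only per-pixel work is a ray-plane intersection and an array index, so the cost does not depend on scene complexity. The price is paid at capture and storage time instead, since the array grows with the product of all four sampling resolutions.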


The only drawback is the capture: Google built an in-house prototype to capture light fields, but the prototype is expensive, complex, and slow…

If only they used a better capture system…

What do you think, @sebi?